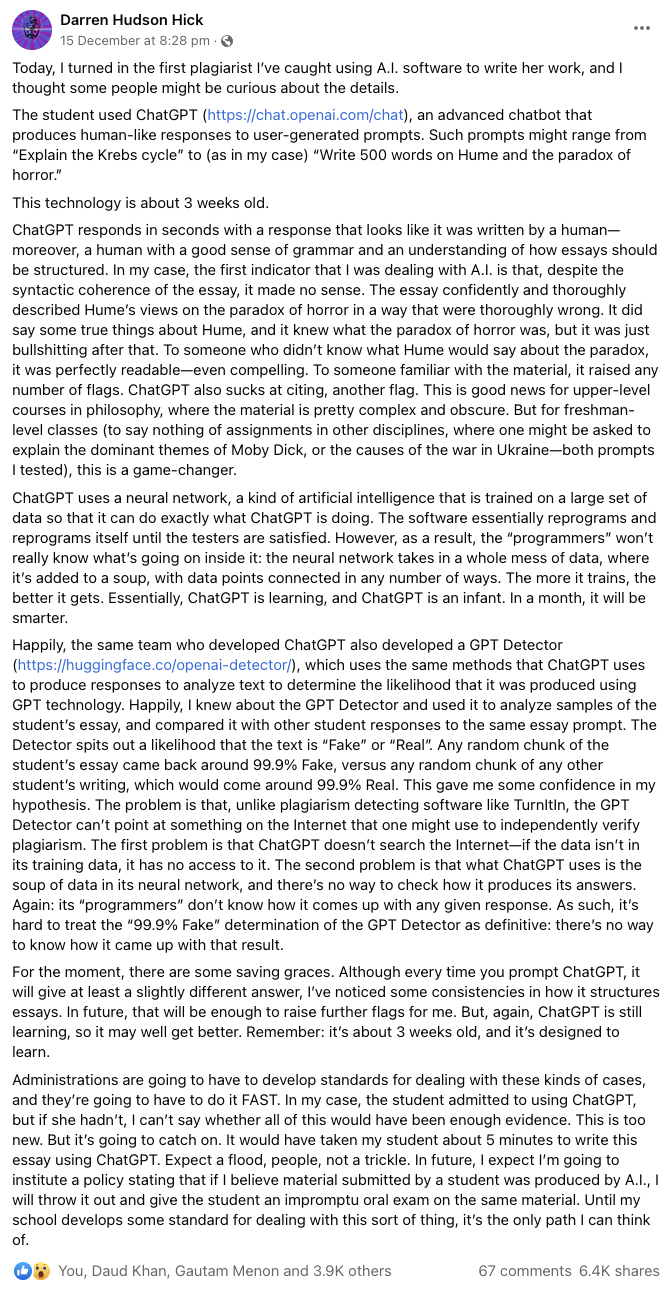

I just read an immensely interesting piece about a teacher who caught out a student who had submitted a report generated with ChatGPT and passed it off as their own creation. He did this using another piece of software, built by the same people who made ChatGPT, which uses the same algorithm to estimate the probability that a submitted piece of work was AI-generated. How fascinating!

Imagine a future where original-content-producing AI and AI-produced-content-detecting AI play cat and mouse in an AI arms race, while we humans lose all sense of what is real and what is ‘fake’. Indeed, I wish to ask: what IS fake? How do you define something as ‘real’ or, conversely, as ‘fake’?

Just because a poem is written by an AI does not make it less…erm…‘poemy’. A fictional story or novel, or even a factual news report, a thesis, or a research paper written by an AI, if it meets all the parameters set for its genre, is, will be, and should be indistinguishable from one written or produced by a human. Maybe the only skill in demand in the future will be the originality and talent to write the input the AI receives so that it creates original, entertaining, and engaging content. That is, until someone builds an AI-powered engine to do that!

But, you’d argue, there will still be a requirement for humans to produce original stuff, right? Like when one is to be assessed for knowledge or skill. In examinations and evaluations. In schools and universities. In job interviews and appraisals. In trying to understand how much that particular human knows.

I think that will pose more and more problems as we go along, because it will become increasingly difficult, and eventually impossible, to separate ‘AI’ from ‘human’. Indeed, once we start building AI into our own lives, and medical science eventually incorporates it as a prosthetic for our cognitive functions, who is really to say what is ‘human’ and what is ‘not human’? With gene editing clearing our systems of all diseases, present and future, our faulty internal organs replaced by nanomachines powered by nuclear fusion, and our intellect and cognition enhanced by AI (all of which will be part of us, as distinct from the external machines and tools that help us in our daily lives), at what stage can you clearly draw a line and claim that on this side lies ‘human’ and on that, ‘non-human’?

Also, from the perspective of consumption (and hence, of the consumer), what does it matter whether the report or the poem or the novel was written by a human or a machine? As long as I get value for what I paid, I should not care, right? Initially, I can see us being a little queasy about the origin of what we are buying, and perhaps there will be movements insisting that products and services be labelled so that the buyer knows a priori whether or not something was produced by a non-human (like the silly GMO labelling movement that is ongoing at present). But this will soon die down as the realisation dawns upon humanity that it has become impossible to draw that line.

You may say all of this is very far away (ChatGPT is not even a month old today). Indeed, I have seen some of my friends pooh-pooh ChatGPT and mock it, saying that it is nowhere close to the kind of real intelligence that could compete with human-produced content, and that it will take a long time to get there. In response, I shall paraphrase a science author whose name escapes me at the moment (please help me out with their name if you know it), who said something along the lines of:

> In matters of science & technology, if something seems 10 years away, it will probably happen much later than you expect; if something seems 100 years away, much sooner.